In the last several months, Google has noticeably stepped up its artificial intelligence (AI) game to compete with ChatGPT and others. However, it is Google's latest release, NotebookLM -- still considered to be in its "Experiment," or beta, phase -- that is truly a showstopper.

Your ability to access NotebookLM may depend on your Google Admin's controls, as well as the typical slow rollout of new Google apps to domains. As I've done PD and demonstrated the tool over the last few weeks, I'd say half of the school districts in the room could use it, and half could not. If you currently cannot access it, check back weekly and/or put in a ticket for your head IT admin. (Like many optional Google products, your Google admin can choose whether only teachers should access it or if/when students can.)

How does it work?

Using NotebookLM requires being logged into your Google account. After going to the platform's website, you are asked to create a new Notebook or play with several pre-made examples. (After you create your first Notebook, you will go straight to a page where you can edit/view your Notebooks, create a new one, or explore the examples.) From the beginning, it's important to point out that Notebooks created in educational domains may not be shareable or viewable outside your own district -- at least for now. (Interestingly, that's not an issue for Notebooks made with a personal Google account.)

For a new Notebook, the first step is sharing resources. Those fall into four buckets: uploading a file from your hard drive (for example, a PDF, TXT or MP3), sharing a Google file from your Drive (currently, only Google Docs and Slides), entering the URL of a website or YouTube video, or pasting some copied text. There is a limit of fifty (!) sources you can share for a single Notebook.

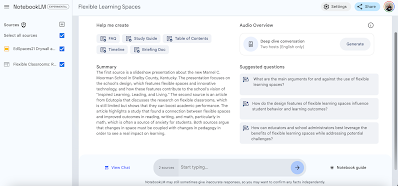

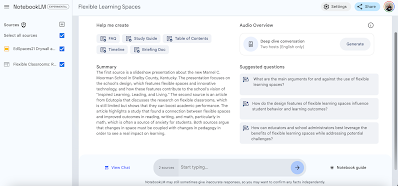

After some seconds of analyzing your sources, you land on the "home" page of your Notebook, called the "Notebook Guide" (see the blue asterisk/text in the lower right, where you can switch back and forth from this to your "Notes" page). At this point, you can edit the name of the Notebook at the top. At the bottom, you see a field to put in your own questions or tasks about your sources. It's important to note this is not a search engine; you're using the power of Gemini AI to delve into just your chosen sources! You also see four other choices for exploration:

- Help Me Create: several buttons to autogenerate certain structures related to your sources, such as a FAQ, Table of Contents, and a Study Guide. Note that some of these, such as the Table of Contents, actually generate a separate result for each source rather than one for everything you've attached. Generated content goes on your "Notes" page.

- Summary: An overview of your sources.

- Suggested Questions: Notebook suggests three possible inquiries about your sources; click one, and Gemini AI answers it. These can become part of your "Chat History" alongside any other custom questions or tasks you pose; this history is viewable by clicking the lower left text ("View Chat").

- Audio Overview: this feature is too mind-blowing to just gloss over, so more on it in a moment.

On the Notes page, you see potential saved responses as discussed above, or you can "Add Note." At first, this may seem like a typical text note, but when completed, you can checkmark one or more of these notes and blue buttons will appear below to "Help Me Understand," "Critique," "Suggest Related Ideas," or "Create Outline." These are, of course, all AI powered. Note the Gemini-powered input bar to type questions or tasks remains below as before.

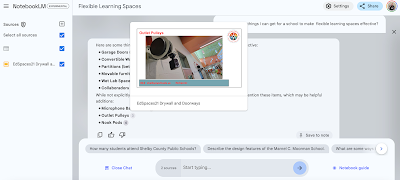

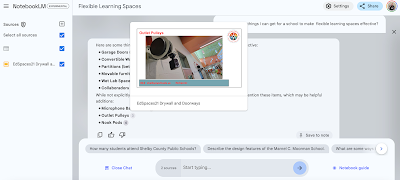

Are you ever annoyed that AI provides output of info but you don't know where it came from? That was an early complaint I had about ChatGPT (although to be fair, some later genAI tools like Microsoft Copilot have such transparency built in). NotebookLM tackles this not only by adding footnotes to its responses to show their sources, but also by letting you go to that part of the source document with a click!

Hover over the number, and its location in the source is previewed...

...and if you click the number, the source appears on the left, going straight to where it occurs (in this case, a particular Slide).

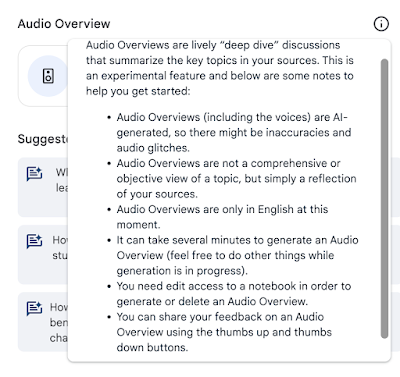

We now finally have to address Audio Overview. While all of the previously described aspects of NotebookLM are pretty impressive, it's Audio Overview that gets the most oxygen when people discuss the tool. In the upper right of your "Notebook Guide" page, hit "Generate" to create a "deep dive conversation." This can take a few minutes to render. Once ready, hit play, adjust the playback speed if you like...and I dare you not to close the lid of your laptop like I did, just to process what I had just heard. Google pointedly does not call it a "podcast," but it's hard not to think of one when you hear two hosts, who sound very much like a man and a woman, talking about the sources you've attached -- complete with informal interjections, thoughtful pauses, stutters, human-like rise and fall of intonation, and even laughter. (It's also not a perfect simulation -- over the course of 8 to 10 minutes, there are several strange pronunciations, moments of flat affect, glitchy audio, and/or sound dropouts.) I've marveled at quite a bit of AI over the last few years, but this feature had me floored.

Another interesting thing is that Google has apparently trained its AI on a significant number of podcasts to recognize certain patterns. For example, I created a Notebook around one PDF of how a teacher uses TTRPGs in his own classroom. I never explained what a TTRPG was in the PDF, as this was considered given schema in its original context. And yet, the Audio Overview took pains to define what a TTRPG is at the start, just as you would likely expect in a typical podcast for a general audience. At the end, it even dreamed up other ways you could use TTRPGs in other classrooms, although again, that was not anywhere in the original PDF. Last but not least, when the Audio Overview uses the second person, the audience is clearly "you" -- that is, the owner of the Google account that made the Notebook.

It's not a human podcast, but it may be the world's first personalized-learning, machine-generated podcast.

Remember that you cannot currently share Notebooks outside of your educational domain. However, click the three dots for the Audio Overview and you have the option to download it as a WAV file, which could then be shared however you like. (Again, rules are different for personal Google accounts; in fact, NotebookLM allows you to create a URL you can share so that anyone can hear your Audio Overview streaming within a browser.) Here's an example of an Audio Overview where my Notebook's sources included a Google Slides presentation I did at EdSpaces in November 2021 along with an Edutopia article.

Friends, we are in new AI territory. And remember, NotebookLM is currently in beta!

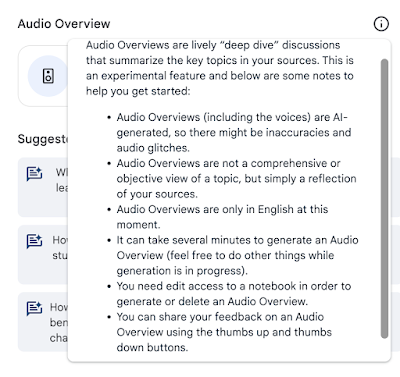

Google provides some informational disclosures on the Audio Overview feature. The second bullet is particularly interesting, as I've already encountered "extra" information in my Audio Overviews.

Some findings and tips that have emerged from my early (albeit limited) tinkering:

- When you first start adding resources to a new Notebook, the process differs depending on the kind of source. For example, if you select a hard drive file to upload, it will immediately begin building a Notebook after just one is selected; if it's a Google file, you could conceivably choose multiple Docs and Slides before Notebook creation starts.

- Once the Notebook is created, you can add or remove resources with the slideout panel on the left side. If you add or remove any resources, however, the only way I've found to "reset" the Notebook with the updated info is to refresh and reload the entire tab.

- If you click on a single source on the left, Notebook will summarize just that one source; online articles are shown as a simplified version of the text.

- When connecting multiple sources, the sheer amount of text/info in a long source may tend to dominate the others. For example, I uploaded a 180-page PDF alongside my deck of 40ish slides, and my slide deck wasn't even acknowledged in the Summary. When I uploaded the same slide deck with the Edutopia online article, both sources were singled out in the Summary and the outputs were more balanced.

- When you leave and return to a Notebook, previously generated Audio Overviews are not immediately available to play, but have to be "retrieved" with a button push. This usually is resolved much faster than the original time it took to generate, however.

- Could you break Gemini's attempt to find patterns and summarize if the sources (at least to human eyes) were random and in no way related? Maybe. It might be worth the experiment to see how Notebook perseveres to make sense of such chaotic data.

How could you use it?

From the student side, NotebookLM gives students the opportunity to create a body of content that they can interrogate with inquiry. It clearly gives struggling students some scaffolding tools to wrestle with new material. Imagine how much easier research can be if you use AI to scan a long document for particular information! A teacher at a recent PD of mine pointed out that a teacher could create and share a Notebook as a kind of last-minute "sub plans" activity -- have students interact with the Notebook, including listening to the Audio Overview. Since we know AI is not 100% accurate (although the footnoted sources to inquiries are a welcome touch of assurance!), I did a "yes and" to that idea and recommended a reflective analysis: students look for flaws either in programming execution or in the facts presented (by reading, listening to, or viewing the original sources) and, most importantly, consider how NotebookLM helped them better understand the content.

Downsides?

The current ability to share your Notebook only within your own educational domain is a frustrating limitation, although considering that not all domains even have access to NotebookLM yet, it makes sense. I also wish that if you clicked on a source in the left side panel, the source itself would open in a new tab (at least if it's a URL or a Google file). Lastly, I think a large "reset" button after sources are added/removed would be more intuitive for a user. Beyond these technical critiques (which, to be fair, may be addressed by the time NotebookLM leaves beta), AI once again brings us to an instructional crossroads. GenAI has already proven itself able to write essays, poems, and songs; what does it mean for students to learn how to synthesize and find themes when they can upload their three social studies primary documents to NotebookLM and produce a summary and a "podcast" within seconds? The answer may be that NotebookLM can and will be used to produce first drafts that students analyze for errors and extend; to serve as a collaborative research partner; and to provide models that students can learn from, imitate, and improve.

I'll definitely be tracking this tool as it goes from "experimental" to full release in the months ahead!

This tool was first brought to my attention by Rebecca Simons of Murray State University's Kentucky Academy of Technology Education (KATE). KATE has been around since 1996, and is a wonderful resource for emerging edtech tools, as well as opportunities for PD. Consider joining the eduKATE community!